Private AI is quietly challenging the assumption that intelligence must come at the cost of data privacy. Public AI, meanwhile, is still booming, but at what cost?

From ChatGPT to Copilot, generative AI tools have exploded in popularity, putting AI capabilities in front of a mainstream audience. Their scope seemingly covers every area, from writing to customer support.

But as these tools continue to dominate the mainstream, privacy concerns are catching up. When you enter a prompt, share a document, or fine-tune a model, where exactly does your data go?

For privacy-conscious individuals and enterprises, this tradeoff isn’t theoretical. The idea that AI models might reuse prompts, leak proprietary data, or violate regulatory compliance has shifted the conversation toward private and secure alternatives.

Private AI and Confidential AI are two approaches that prioritize privacy by design. Moreover, they show a future where AI models can be powerful without compromising privacy. And thanks to new decentralized infrastructure from platforms like iExec, privacy-first solutions are live, scalable, and ready to deploy.

Private AI refers to artificial intelligence systems and workflows designed to preserve user and enterprise data privacy by default. These systems operate under the assumption that all data, including training data, inference inputs, and model outputs, should remain within a trusted, controlled environment.

Key technologies that support Private AI include:

This approach is especially relevant in regulated industries like healthcare and finance, where data cannot be transferred to third-party services without strict compliance.

Using public AI is convenient, but with increased use come increased risks. These AI technologies typically rely on datasets that are publicly available, crowd-sourced, or scraped. Users feed in sensitive data, and those inputs might stick around longer than expected.

This becomes a problem when that same AI starts surfacing confidential data, prompts, or code snippets in unrelated outputs. Data reuse is a common issue, and many platforms offer no guarantees that user inputs won’t train future models.

Worse, these models often run in public cloud environments with minimal runtime protections. Enterprises that care about regulatory compliance (e.g., HIPAA, GDPR, or internal policies) are stuck between two bad choices: use non-confidential AI and risk exposure, or skip AI adoption entirely. Neither scales.

And while large language models might deliver strong performance, they aren’t delivering peace of mind. This is the privacy paradox: everyone’s using open-access AI, everyone’s feeding it personal data, but few trust it.

Private AI solutions are a huge leap forward for privacy. They keep sensitive information close, often inside private data centers, and give enterprises more control over data. But when it comes to truly replacing public AI tools while maintaining performance, usability, and data privacy, Private AI hits a wall.

Despite being trained on private data and running within trusted environments, most Private AI models still rely on standard infrastructure that leaves data vulnerable during use. This is a blind spot: the compute itself, the part where the model runs and delivers results, can become a leak point. Without deeper protections, fine-tuning models or doing inference can expose data to insiders or infrastructure operators.

This is where Confidential AI changes the game.

Confidential AI secures data in use through Trusted Execution Environments (TEEs), isolating workloads from the rest of the system, even from cloud providers themselves. Unlike Private AI, which often requires custom setups and deep technical overhead, Confidential AI can be simpler to deploy and scale. Modern Confidential AI platforms let enterprises run powerful models without compromising on privacy, and without rewriting their infrastructure from scratch.

Ironically, it’s now easier to use Confidential AI than Private AI. No need to build an entire isolated stack or manage bespoke data silos. With providers like iExec offering plug-and-play solutions built around AI, enterprises can leverage AI securely with minimal friction.

Confidential AI adds cybersecurity guarantees to the AI workflow:

So while Private AI offers a critical first layer of protection, it’s Confidential AI that makes those protections hold up in the real world, especially in highly regulated sectors like healthcare and finance, or across global AI ecosystems.

If an organization wants to use AI, build an AI, or unlock AI capabilities that are different from public AI, Confidential AI is the only approach that scales without compromise.

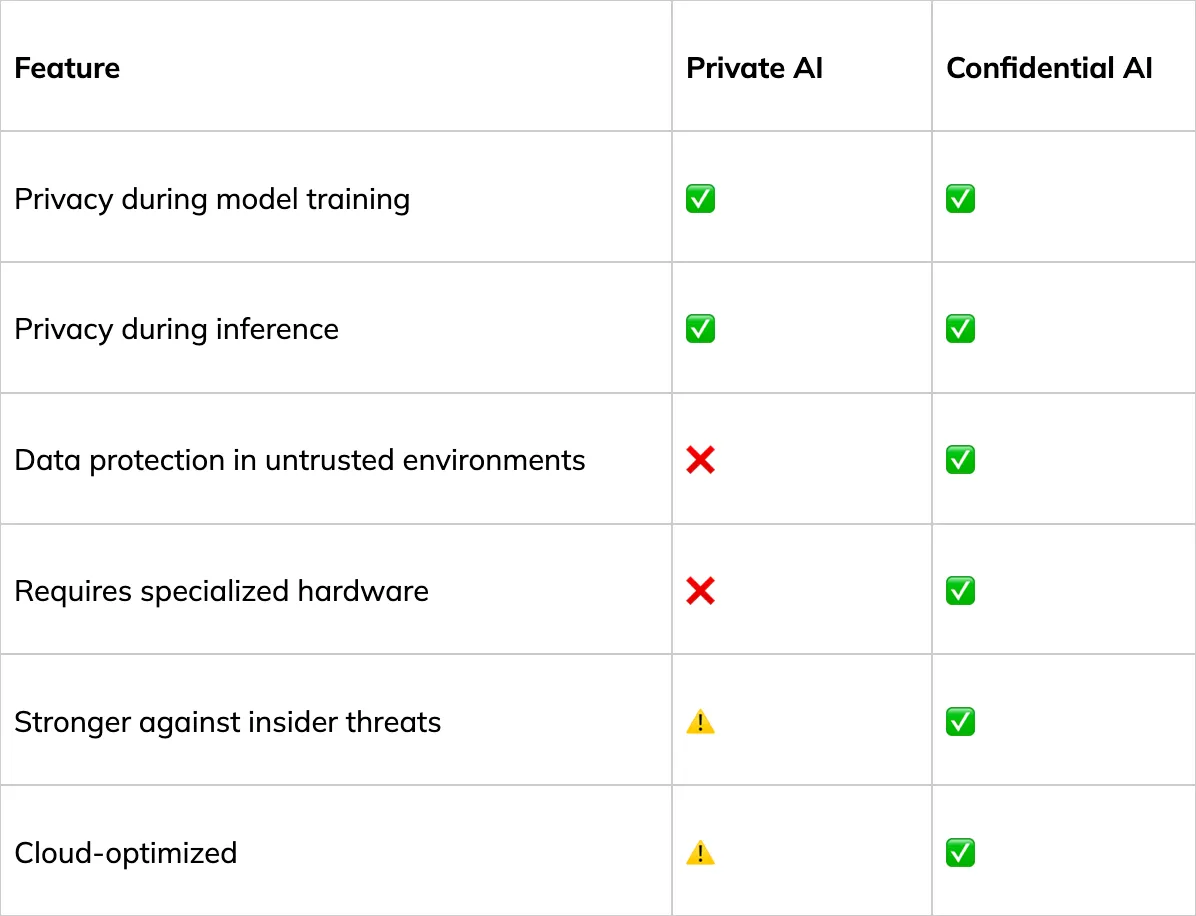

Private AI and Confidential AI are often bundled together, but they address different layers of the privacy stack.

Private AI operates at the software level, using frameworks, encryption techniques, and decentralized learning protocols to keep data private. But it stops short of safeguarding the full LLM infrastructure.

As noted above, Confidential AI is built on trusted execution environments (TEEs) and protects data in use. This means your dataset, AI model, and inference process are isolated from the rest of the system, even from the cloud provider itself. TEEs ensure computation happens in an encrypted, tamper-resistant bubble.
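To make the idea of trusting an enclave before handing it data more concrete, here is a minimal sketch of attestation-gated key release. It is a toy simulation under loud assumptions: there is no real TEE here, the function names (`measure`, `make_quote`, `verify_quote`, `release_data_key`) are hypothetical, and an HMAC with a shared key stands in for the hardware-backed signature that a real attestation quote would carry. It is not the API of iExec or any TEE vendor.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION: stands in for a hardware-rooted signing key. Real TEEs use
# asymmetric, vendor-certified keys; a shared HMAC key is a simplification.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(code: bytes) -> str:
    """Return a hash of the code loaded into the simulated enclave."""
    return hashlib.sha256(code).hexdigest()


def make_quote(code: bytes) -> dict:
    """Build a simulated attestation quote: a measurement plus a signature."""
    measurement = measure(code)
    signature = hmac.new(HARDWARE_KEY, measurement.encode(), hashlib.sha256).hexdigest()
    return {"measurement": measurement, "signature": signature}


def verify_quote(quote: dict, expected_measurement: str) -> bool:
    """Accept only a validly signed quote for the exact code we expect."""
    expected_sig = hmac.new(
        HARDWARE_KEY, quote["measurement"].encode(), hashlib.sha256
    ).hexdigest()
    signature_ok = hmac.compare_digest(quote["signature"], expected_sig)
    code_ok = hmac.compare_digest(quote["measurement"], expected_measurement)
    return signature_ok and code_ok


def release_data_key(quote: dict, expected_measurement: str, data_key: bytes):
    """Hand the data key over only if the enclave passes verification."""
    if not verify_quote(quote, expected_measurement):
        return None
    return data_key


if __name__ == "__main__":
    trusted_code = b"def infer(prompt): ..."
    data_key = secrets.token_bytes(32)
    quote = make_quote(trusted_code)
    print(release_data_key(quote, measure(trusted_code), data_key) is not None)
```

The design point is that the data owner never sends the decryption key to "the cloud" in general. The key goes only to a workload that can prove which code it is running, which is what lets the data stay protected while in use.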

In essence, Confidential AI elevates Private AI. It adds infrastructure-level guarantees to privacy-preserving AI workflows.

If Confidential AI elevates Private AI, then iExec elevates Confidential AI. iExec combines Confidential AI with blockchain-based verification so developers can build decentralized, auditable AI services that require zero trust.

Think about deploying a generative AI model in healthcare or finance. With iExec, every stage of the process (from prompt to AI outputs) is secured in TEEs, logged on-chain, and governed by smart contracts. This isn’t just about security. It’s about creating AI environments where trust is enforced.
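The "logged and auditable" part of that workflow can be illustrated with a hash-chained audit log. This is only a sketch of the general tamper-evidence idea behind an on-chain record, written with the Python standard library. The stage names, record fields, and helper functions (`entry_hash`, `append`, `verify`) are hypothetical and do not reflect iExec's smart contracts or data formats. Note that the records hold digests of the prompt and output, not the content itself.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + payload).hexdigest()


def append(log: list, record: dict) -> None:
    """Append a record, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"record": record, "prev": prev_hash, "hash": entry_hash(prev_hash, record)}
    )


def verify(log: list) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    prompt_digest = hashlib.sha256(b"patient summary request").hexdigest()
    output_digest = hashlib.sha256(b"generated summary").hexdigest()
    append(log, {"stage": "prompt_received", "digest": prompt_digest})
    append(log, {"stage": "inference_done", "digest": output_digest})
    print(verify(log))
    log[0]["record"]["stage"] = "edited_after_the_fact"
    print(verify(log))
```

Because each entry commits to the one before it, anyone holding the latest hash can detect later edits to earlier stages. A blockchain adds decentralized agreement on that latest hash, which this local sketch does not attempt to model.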

As a Gold Member of the Intel Partner Alliance, iExec has integrated Intel TDX into its Confidential AI stack. Effortless Confidential AI means a workflow where developers don’t need to rewrite their models or battle complex syscalls. AI workloads run securely and verifiably from day one.

Confidential AI unlocks a wide range of secure AI applications across sensitive domains:

One standout is iExec’s Private AI Image Generation, which uses encrypted prompts to generate visuals without storing or reusing user data. Everything is processed in a secure enclave. No logging, no reuse, no surprises.

The future of AI is confidential and private, not public.

Publicly available AI has delivered massive value but comes with real tradeoffs. The need for privacy-first AI infrastructure is no longer a niche concern. It’s the foundation for deploying AI at scale in a way that protects users, safeguards private data, and meets global compliance standards.

Private AI is the critical step to keep data local, decentralized, and under user control. But to truly future-proof your AI operations, Confidential AI is the missing layer: secure AI model training, confidential inference, and infrastructure-level isolation.

iExec is helping define the future of AI by building the AI infrastructure for a decentralized, privacy-first future. A future where powerful AI doesn’t require blind trust.